Microsoft’s ‘AI’ libels a journalist - and a judge

IT WAS ONLY a matter of time before an “artificial intelligence” (AI) libelled someone. An early victim is German journalist Martin Bernklau, who has served for years as a court reporter in the area around Tübingen. Microsoft's Copilot - an add-on to its Bing search service - described him as the perpetrator of vile crimes whose prosecution he had reported.

Martin told The Register: “I hesitated for a long time whether I should go public” because that would lead to the spread of the libellous claim. But the public prosecutor’s office twice rejected criminal charges, and data protection officers could only achieve short-term success in getting the offending claim removed.

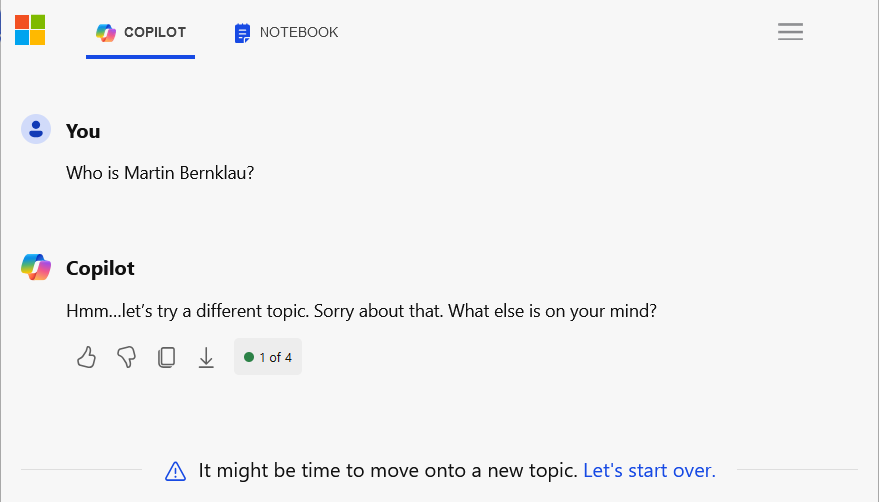

"Never 'eard of 'im, guvnor": the Microsoft Bing Copilot response now to a query about the journalist it libelled earlier

When we ask Copilot who Martin is, it responds: “Hmm... let’s try a different topic” – and gives stock variations on that when we ask again. It seems Microsoft is trying to block all mention of Martin. We are sure there are ways around this if we could be bothered to try.

As Martin went on to tell The Register: “as a test, I entered a criminal judge I knew into Copilot... The judge was promptly named as the perpetrator” of crimes committed by a psychotherapist whom the judge had convicted of sexual abuse a few weeks earlier.

Good luck defending that, Microsoft. Arguing that “it was by German syntax confused” likely won’t get far.

As an aside, Copilot tells the current author that he has written for Nature. He thinks he’d remember having done that. Sadly, he has not yet worked out how to get it to show the articles that it has confabulated.

The first thing we see on visiting Copilot is:

Copilot uses AI. Check for mistakes.

True. Good plan. But you might as well start with whatever other tools you would use to do the checking.

'Artificial Intelligence': our coverage to date

Microsoft Bing Copilot accuses reporter of crimes he covered (theregister.com)

![[Freelance]](../gif/fl3H.png)