What does it mean that machine-learning confabulates?

Is ‘artificial intelligence’ always wrong?

ONE MEMBER was recently called away from their sub-editing shift to a management presentation encouraging journalists to think about using "artificial intelligence" tools in their work. Could we be using such systems as ChatGPT to draft Tweets, or standfirsts, or headlines?

A poster for the conference on Deliberation, Interpretation, and Confabulation at the VU University Amsterdam on 19 and 20 June 2015

"But," the member piped up, "AI is always wrong." Not always, the presenter demurred. "OK. Consider the AI we already use - machine translation. It does the typing for us en Français, par example. But then we must assume that it is wrong." We must check every word to be sure that it hasn't got the wrong end of the collant. Otherwise, we will definitely be severely embraced sooner or later.

The thing is, once more, that "artificial intelligence" is a marketing term. What we have here are machine learning systems. They ingest vast quantities of text. Then they encode the patterns in that text - often called the "data-set" on which they are "trained". You ask them a question. Based on those patterns, they confabulate.

Confabu-what? Have you ever encountered a person with dementia, trying to explain, for example, how the flowers on their bedside table arrived? They may well tell a coherent and even convincing story about what shop they bought them in - despite the evidence that they haven't been outdoors for months. That's confabulation.

The human mind demands that there be stories. As an analogy, tinnitus - ringing in the ears - can happen to someone who cannot hear certain frequencies: the brain fills them in. Confabulation can happen when the mind does not have details - it fills them in. Systems such as ChatGPT have a similar imperative to produce responses. They fill in what they don't "know" - what is not literally present in the training data - producing stuff that's reminiscent of the training data.

The main feature of ChatGPT output is what US satirist Stephen Colbert first called, in 2005, "truthiness". It has the stylistic attributes of true statements.

How can we be sure these statements are true? By considering well-studied fields of knowledge.

Skilled users can push such a system to exhibit even more truthiness - for example by demanding that it provide references for its claims, as would a scientific paper.

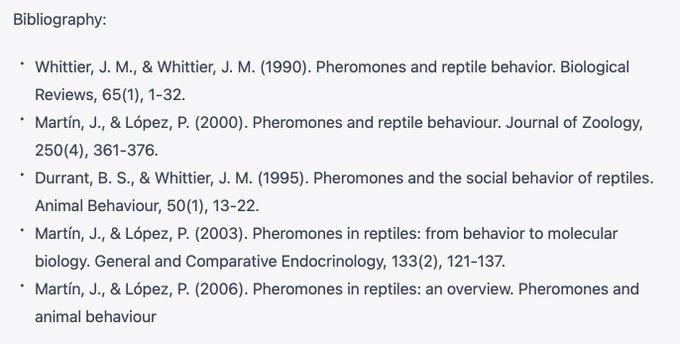

And, what do you know? ChatGPT will make up references to non-existent academic papers. There are many examples: we take one at random here. Matthew Cobb is Professor of Zoology at the University of Manchester. He asked ChatGPT to produce a university-level essay on pheromones in reptiles. It did. And it produced this:

Fake references generated by ChatGPT

The above confabulation is in its way extremely impressive. The journals exist. The authors exist, and publish on pheromones (substances whose smells communicate). But the paper titles, dates and page numbers are made up. Strangely, Pheromones and Animal Behaviour is a real book, but it's a 2003 monograph by Tristram D Wyatt, not by Martín and López.

There's much more to consider. Some of it would take us deep into the weeds of the philosophy of knowledge. ChatGPT is only a beginning: future systems will exhibit much more truthiness. It is possible that the results will start to illustrate the fallibility of human knowledge as much as that of machines' pretence of knowledge.

The take-home message will, though, not change: as a journalist, take nothing at face value. Check the sources for everything.

And what if in the near future the internet search tool that you use to do that checking is powered by machine learning of the kind that confabulates? Will newsrooms and freelances need to rebuild their libraries of actual, pre-AI books?

6 June 2025 We have an example in the wild of this kind of confabulation being used by the US administration to justify harmful policy.

Making America groan again 'AI' making policy once more

Making America groan again 'AI' making policy once more

19 June 2025 The publisher mentioned above whose enthusiasm for 'AI' prompted this piece has issued a memo to journalists and, with added emphasis, to marketing folk. Summarising by hand: “do not use AI without treble-checking”.

And another thing, or two...

There are of course questions about whether that ingestion of training data is a breach of copyright.

And a note for sub-editors: it is usually misleading to refer to a machine learning system as an "algorithm". I, at least, would like to save "algorithm" to name deterministic processes whose workings can be traced. One of the features of machine learning is that the patterns it encodes cannot be traced. There are algorithms that build the pattern encoding, but they are not where the outputs come from.

Using 'AI' is never safe research shows - and legality update 26/10/24

Using 'AI' is never safe research shows - and legality update 26/10/24

Can AI assist journalists? Branch meeting Nov 2018

Can AI assist journalists? Branch meeting Nov 2018

Having trouble with that phrase? Try this... an AI subs' aid - Feb 2023

Having trouble with that phrase? Try this... an AI subs' aid - Feb 2023

'Artificial Intelligence' our coverage to date

'Artificial Intelligence' our coverage to date

![[Freelance]](../gif/fl3H.png)